✨ Powered by your own language model prompt. Ideal for dynamic and context-aware hint generation.

🔍 What It Does

Instead of defining static hint options, this action asks the assistant to generate relevant choices using a custom prompt. The output can vary based on the conversation, user input, or session parameters.

🔎 Perfect for smart search suggestions, personalized onboarding, or adaptive FAQ prompts.
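This page does not document the exact request the action sends to the model. As a rough illustration only, the Python sketch below combines a custom prompt with the user's last message, passes it to a model, and turns the reply into a short list of hint options. `generate_hints`, `parse_hints`, and `fake_model` are hypothetical names. The sketch assumes the model answers with one suggestion per line, and `fake_model` stands in for a real language model call.

```python
import re
from typing import Callable, List


def parse_hints(raw: str, max_hints: int = 3) -> List[str]:
    """Turn free-form model output into a clean list of hint options.

    Assumption: the model returns one suggestion per line, optionally
    prefixed with a bullet ("-", "*", "•") or a number ("1." or "1)").
    """
    hints: List[str] = []
    seen = set()
    for line in raw.splitlines():
        # Strip a leading bullet or number, plus surrounding whitespace.
        text = re.sub(r"^\s*(?:[-*•]|\d+[.)])\s*", "", line).strip()
        if not text:
            continue
        key = text.lower()
        if key in seen:  # drop case-insensitive duplicates
            continue
        seen.add(key)
        hints.append(text)
        if len(hints) == max_hints:
            break
    return hints


def generate_hints(custom_prompt: str, last_message: str,
                   call_model: Callable[[str], str],
                   max_hints: int = 3) -> List[str]:
    # Combine the custom instruction with the conversation context.
    prompt = (f"{custom_prompt}\n\n"
              f"User's last message: {last_message}\n"
              "Return one option per line.")
    return parse_hints(call_model(prompt), max_hints)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real language model call.
    return ("1. How do I reset my password?\n"
            "2. Can I change my email?\n"
            "\n"
            "- How do I reset my password?\n"
            "3) Where is my billing history?")


print(generate_hints(
    "Suggest 3 common follow-up questions based on the user's last message.",
    "I cannot log in",
    fake_model,
))
# ['How do I reset my password?', 'Can I change my email?', 'Where is my billing history?']
```

Parsing defensively (stripping numbering, removing duplicates, capping the count) keeps the hint list tidy even when the model does not follow the requested format exactly.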

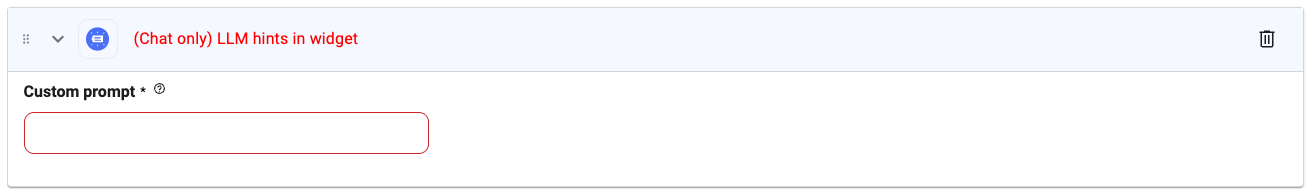

🖼️ Action Interface

⚙️ Configuration Options

Custom Prompt (required)

Type: `string`

The custom instruction for the language model to follow when generating hints.

Example: "Suggest 3 common follow-up questions based on the user’s last message."

🧵 Tips

- Use conversational context with `@parameters` in the prompt.

- Keep the prompt direct and focused on generating options (e.g., questions, intents, next actions).

- Use this action when different users might need different help pathways.
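If parameters are referenced as `@name` placeholders (an assumption; check the product's own parameter syntax), filling them in before the prompt reaches the model could look like this minimal Python sketch. `render_prompt` and the example parameter names are hypothetical. Unknown placeholders are deliberately left untouched, so text such as an email address survives unchanged.

```python
import re
from typing import Mapping

# Hypothetical syntax: a parameter is written as "@" followed by its name,
# e.g. "@user_name". The real placeholder rules may differ.
PARAM_PATTERN = re.compile(r"@(\w+)")


def render_prompt(template: str, params: Mapping[str, object]) -> str:
    """Replace each @name with params[name]; unknown names are left as-is."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        return str(params[name]) if name in params else match.group(0)

    return PARAM_PATTERN.sub(substitute, template)


template = ("Suggest 3 next steps for @user_name, who is on the @plan plan "
            "and asked: @last_message")
session = {"user_name": "Dana", "plan": "Pro",
           "last_message": "How do I export my data?"}
print(render_prompt(template, session))
# Suggest 3 next steps for Dana, who is on the Pro plan and asked: How do I export my data?
```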